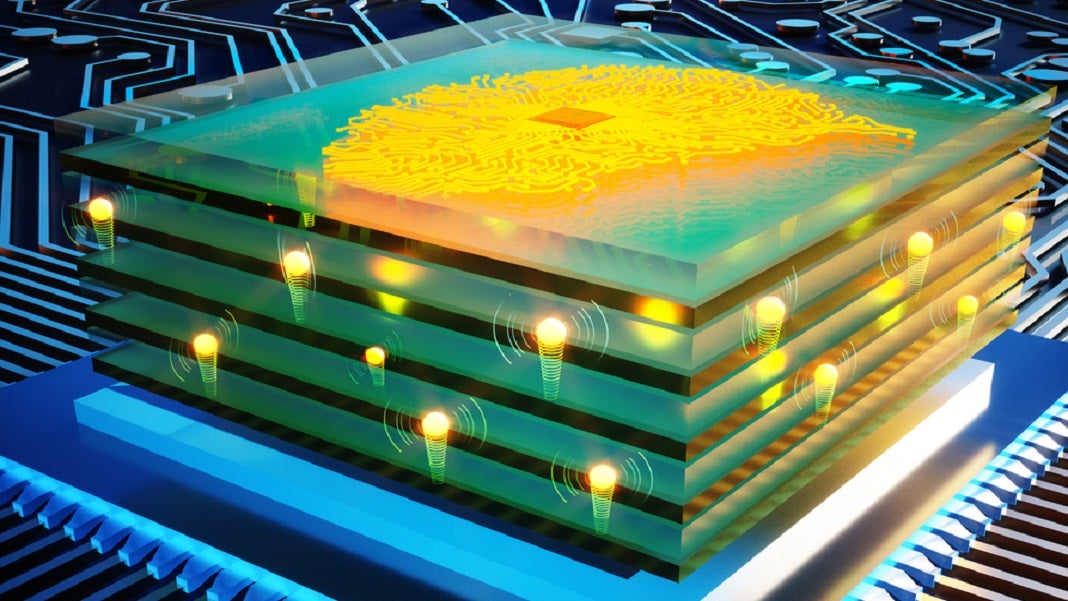

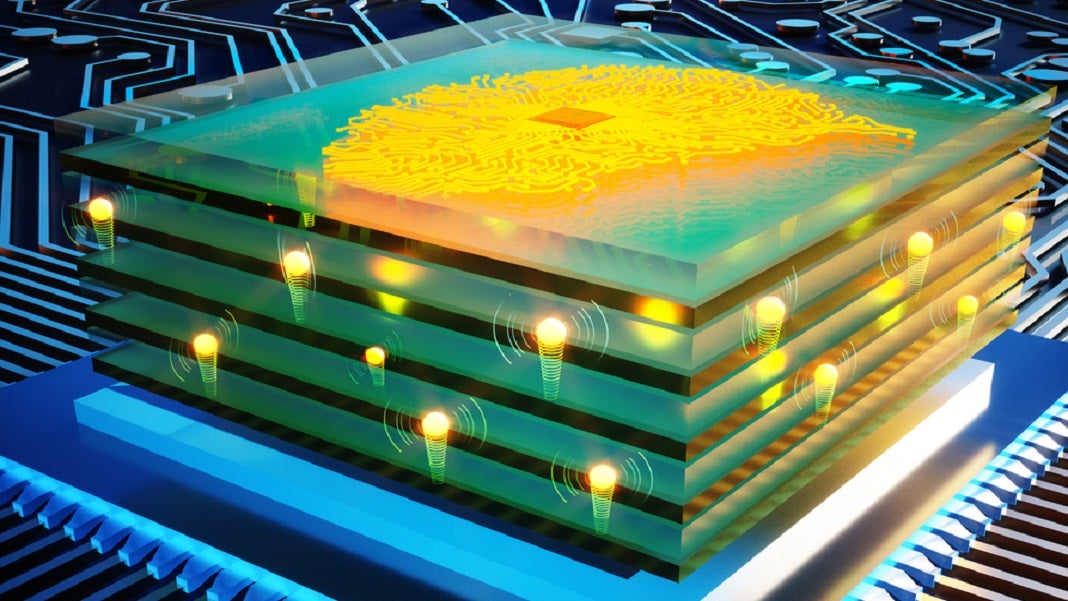

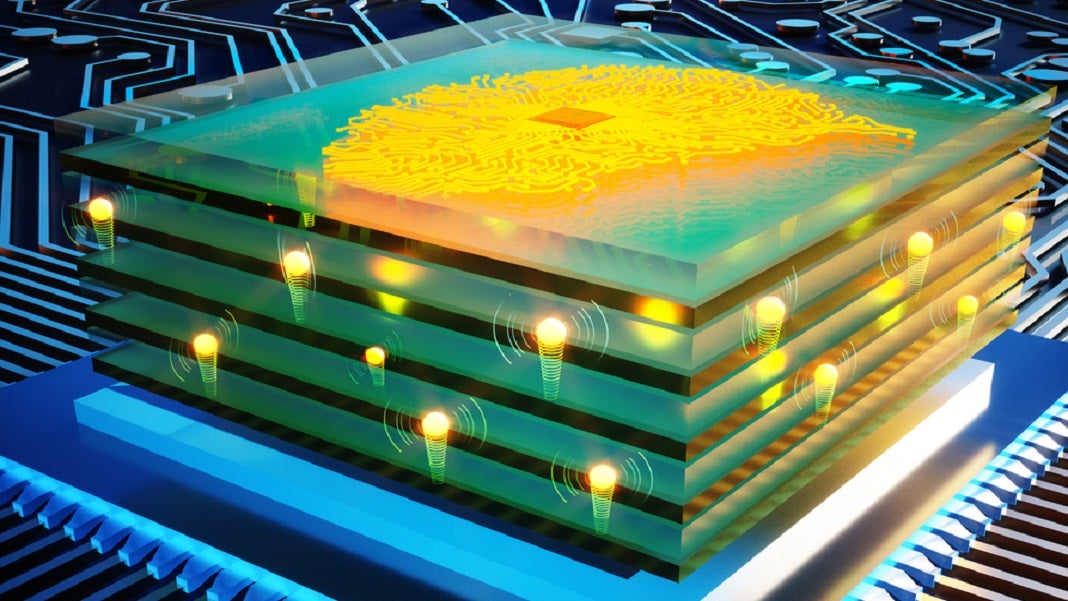

MIT Researchers Create Artificial Synapses 10,000x Faster Than Biological Ones

By building chips whose components act more like natural neurons and synapses, we might be able to approach the efficiency of the human brain.

singularityhub.com

The Zeroth Law is the universal law that a robot may not harm humanity or, through inaction, allow humanity to come to harm.

It’s like they never learn.

Robotics researchers are disavowing Asimov’s Three Laws of Robotics (there’s a fourth law, or addendum, whose name I don’t recall) as they move forward, saying that the laws are slowing them down…

They are launching into AI by letting it teach itself as opposed to guiding its education such that it would see humans as, if nothing else, benevolent… in the past, almost every conversational AI has devolved into literal Nazi speech (which proves Godwin’s Law if nothing else) if not either frighteningly fascistic or nihilistic.

Even with AI, we’re pretty far from Skynet, but not so far from an AI writing malicious code and simply shutting down anything connected to the internet… AI can already write prodigious code, and we have to assume that many of the bleeding-edge capabilities won’t be known for some time… Couple that with quantum computers and their potential to smash encryption that would take normal digital computers thousands of years or more to crack, and oof…

The one thing they’ll never make is an AI comedian…

What do you mean by "devolving into literal Nazi speech"?

They are launching into AI by letting it teach itself as opposed to guiding its education such that it would see humans as, if nothing else, benevolent… in the past, almost every conversational AI has devolved into literal Nazi speech (which proves Godwin’s Law if nothing else) if not either frighteningly fascistic or nihilistic.

Well, there’s quite a lot to unpack here, and I’m not fully understanding what you’re saying, Mac. But I know you’re damn smart and knowledgeable, so I’d be curious to hear you explain a bit more.

Mac, I know you're sick and whatnot, so feel free to wait to respond until you're feeling better.

That being said, I couldn't find anything referencing any other chatbots turning into Nazis. Even when chatbots do spout objectively terrible moral statements, they are only parroting things. They don't actually have a moral philosophy, so they will repeat all sides of an argument with the same frequency they read them. Microsoft's bot (Tay) is a decent example of that. They don't have the memory/awareness to actually decide on something. They just spit shit out. I've been working in call centers for a while, and let me tell you, people give chatbots way too much credit. People think my agents are chatbots all the time. The reality is that the chatbot part of the conversation is so simple that they don't even think it's a bot.

I also couldn't find any references to a coding AI that could do anything "prodigious." There was an article from early this year about one being mediocre.

I've heard of AI being able to do some crazy things, like determining a person's race from X-rays that were pixelated to the point of being unrecognizable, but nothing capable of shutting down internet connections it doesn't have access to, on devices it has probably never interfaced with (I know they secretly all run on Linux, but not all have a CLI, and many have proprietary layers on top of the Linux OS). Plus, the first internet connection it's going to find is its own (either its own Ethernet adapter or the one from the source it's connected to), and it's going to isolate itself.

Within the world of AI development currently, the prevailing sentiment is that AI is just a tool to be used by humans: the AI does a thing, and a human checks the output and confirms or denies it. Ethics in AI is a huge topic as well, and it is being addressed by the top players in that arena. As always, it's GIGO (garbage in, garbage out), and they know it.

We are so incredibly far from anything remotely close to autonomous AI murder-bots. We're not close to a general intelligence AI, which is basically what you would need. AI right now is application-specific, meaning there's one to have conversations (poorly), but it can't parse photos. There's one that can identify animals in photos, but it can't read license plates. There's one that can read license plates, but it can't make a medical diagnosis. There's one that can make a medical diagnosis, but it can't write code. There's one...

My concern leans more toward armed robots using AI and employed by the military or police, which could be programmed by a reprehensible tyrant or even be vulnerable to hacking.

At my age, I don't really have a stake in how the future shakes out; perhaps the confrontation between HAL and Dave in 2001: A Space Odyssey left a bigger impression on me than is warranted. jmo.

Mac, I know you're sick and whatnot, so feel free to wait to respond until you're feeling better.

That being said, I couldn't find anything referencing any other chatbots turning into Nazis. Even when chatbots do spout objectively terrible moral statements, they are only parroting things. They don't actually have a moral philosophy, so they will repeat all sides of an argument with the same frequency they read them. Microsoft's bot (Tay) is a decent example of that. They don't have the memory/awareness to actually decide on something. They just spit shit out. I've been working in call centers for a while, and let me tell you, people give chatbots way too much credit. People think my agents are chatbots all the time. The reality is that the chatbot part of the conversation is so simple that they don't even think it's a bot.

I also couldn't find any references to a coding AI that could do anything "prodigious." There was an article from early this year about one being mediocre.

I've heard of AI being able to do some crazy things, like determining a person's race from X-rays that were pixelated to the point of being unrecognizable, but nothing capable of shutting down internet connections it doesn't have access to, on devices it has probably never interfaced with (I know they secretly all run on Linux, but not all have a CLI, and many have proprietary layers on top of the Linux OS). Plus, the first internet connection it's going to find is its own (either its own Ethernet adapter or the one from the source it's connected to), and it's going to isolate itself.

Within the world of AI development currently, the prevailing sentiment is that AI is just a tool to be used by humans: the AI does a thing, and a human checks the output and confirms or denies it. Ethics in AI is a huge topic as well, and it is being addressed by the top players in that arena. As always, it's GIGO (garbage in, garbage out), and they know it.

We are so incredibly far from anything remotely close to autonomous AI murder-bots. We're not close to a general intelligence AI, which is basically what you would need. AI right now is application-specific, meaning there's one to have conversations (poorly), but it can't parse photos. There's one that can identify animals in photos, but it can't read license plates. There's one that can read license plates, but it can't make a medical diagnosis. There's one that can make a medical diagnosis, but it can't write code. There's one...

You get the idea. Murder bots are a ways out.

They already have insect-sized remote devices. There are robots that can run obstacle courses now, too, and they are being refined year to year. Robots will become the soldiers. They will become the police. They will do all the harvesting, garbage and hazmat handling, repair of equipment and structures, etc. They will do the things we don't want to do.

We are so incredibly far from anything remotely close to autonomous AI murder-bots.